This page covers how audio is recorded and used in digital and analog media. It also explains how digital audio files are created and encoded into various formats, and the differences between the two types of audio codecs: lossy and lossless.

Analog vs Digital

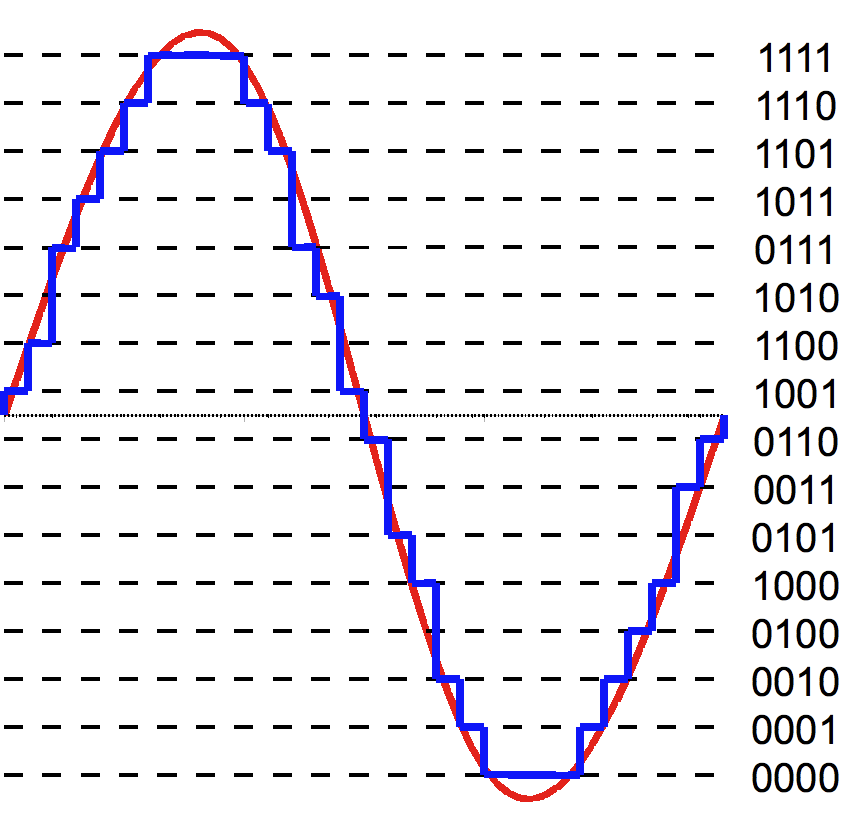

The simplest way to describe analog versus digital is that an analog signal can take any value in a continuous range. For example, if you wanted to list all the real numbers between 0 and 1, you’d find that there are infinitely many to choose from. A digital signal is restricted to a finite set of values within that range, such as ½, ¾, and ⅝, and every measurement is rounded to the nearest one.

What does this mean in terms of audio?

- In analog terms, what we hear in person are continuous sound waves that travel smoothly to our ears, carrying every frequency we are able to hear. An analog recording aims to capture that continuous wave directly.

- Digital audio approximates what we hear using a fixed sample rate and bit depth. The resulting sound wave isn’t truly continuous; instead, its amplitude is broken up into discrete steps.

How was audio played before computers?

- Before computers, there were two primary formats for analog audio: tapes and vinyl records.

- Tapes were the primary method of recording audio before the digital age. An electrical signal is passed through a coil of wire wrapped around a magnet (the recording head), creating a magnetic field that is transferred onto a strip of tape moving close to the head. Two factors affect tape quality: the speed and the width of the tape. Faster tape speeds give higher quality, and wider tape can too, although wider tapes are usually used to store more audio tracks in one recording.

- Vinyl records weren’t primarily used for recording audio, but rather for mass distribution. They use textured grooves to store audio information, which can then be played back on a record player. While the sound quality was not as good as tape, vinyl was easier to distribute, took up less space than some of the larger tapes, and was more durable. Tapes wear down over time and when exposed to other magnetic fields, whereas vinyl can last indefinitely, provided it doesn’t get physically damaged.

How is audio recorded into a digital output?

- PCM or Pulse Code Modulation is the most common method of taking audio signals and converting them into binary.

- PCM measures the analog audio signal at regular intervals and stores a sequence of values that best match the amplitude of the wave at each point.

- It finds these values using quantization, which is the process of taking an infinite range of values and mapping them to a smaller, finite set of values, as discussed earlier.

- Quantization is performed by the analog-to-digital converter (ADC) chip, which uses voltage comparators to approximate the amplitude of the sound wave for every sample.

- Each of these stored values is known as a “sample”, and each one represents a small slice of the audio.

- The range of amplitude values is determined by what’s referred to as bit depth. The more bits there are in a sample, the larger the range of sound, and the less noise gets created. Noise here means sounds that aren’t part of the music, but are instead introduced by the electronic devices used to record and play it back.

- As with all binary information, the higher the bit depth, the more information can be stored in a single block of data, or in this case, a sample. An 8-bit sample can take one of 256 possible amplitude values. A 16-bit sample has 65,536 possible values; this is the bit depth most commonly used for CDs and .WAV files.
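The sampling and quantization steps described above can be sketched in a few lines of Python. This is only an illustration, not how an ADC chip works internally: the function names `quantize` and `pcm_encode` are made up for this example, the input is a pure sine wave rather than a real recording, and amplitudes are assumed to lie between -1.0 and 1.0.

```python
import math

def quantize(value, bit_depth):
    """Map an amplitude in [-1.0, 1.0] to the nearest signed integer code."""
    levels = 2 ** bit_depth            # number of distinct values a sample can take
    max_code = levels // 2 - 1         # e.g. 127 for 8-bit, 32767 for 16-bit
    min_code = -(levels // 2)          # e.g. -128 for 8-bit
    code = round(value * max_code)
    return max(min_code, min(max_code, code))

def pcm_encode(frequency_hz, sample_rate_hz, bit_depth, num_samples):
    """Sample a sine wave at regular intervals and quantize each sample."""
    samples = []
    for n in range(num_samples):
        t = n / sample_rate_hz                              # time of the n-th sample
        amplitude = math.sin(2 * math.pi * frequency_hz * t)
        samples.append(quantize(amplitude, bit_depth))
    return samples

print(2 ** 8, 2 ** 16)                  # 256 65536
# One full cycle of a 1 kHz tone, sampled 8,000 times per second at 8 bits.
print(pcm_encode(1000, 8000, 8, 8))     # [0, 90, 127, 90, 0, -90, -127, -90]
```

Raising `bit_depth` gives more possible codes per sample, so each stored value lands closer to the true amplitude of the wave.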

Sample Rate and The Nyquist-Shannon Theorem

The number of samples of a sound wave that are captured or played back each second is called the sample rate. Sample rate is measured in hertz (Hz), the same unit used for frequency, where 1 Hz is one full cycle of a sine wave per second; a sample rate of 1 Hz means one sample per second.

What determines the sample rate?

- The general range of human hearing is around 20 to 20,000 Hz (or 20 Hz to 20 kHz). This range is fairly limited compared to many other animals, which can hear well past 20 kHz.

- To properly sample a signal or sound wave without losing any information, according to the Nyquist-Shannon sampling theorem, one would need to sample it at a rate that is more than double the highest frequency present in the audio signal.

- Since the highest frequencies we can hear only go up to about 20 kHz, the sample rate must be above 40 kHz so that no audible information gets lost in the final digital file.
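As a rough check of the rule above, the following sketch tests a few sample rates against a 20 kHz hearing limit. The helper names `nyquist_frequency` and `can_capture` are hypothetical, chosen only for this example.

```python
HUMAN_HEARING_LIMIT_HZ = 20_000

def nyquist_frequency(sample_rate_hz):
    """Highest frequency a given sample rate can represent without aliasing."""
    return sample_rate_hz / 2

def can_capture(max_frequency_hz, sample_rate_hz):
    """True if the sample rate is more than double the highest frequency."""
    return sample_rate_hz > 2 * max_frequency_hz

for rate in (32_000, 40_000, 44_100, 48_000):
    print(rate, nyquist_frequency(rate), can_capture(HUMAN_HEARING_LIMIT_HZ, rate))
```

Under the strict "more than double" reading of the theorem, 40 kHz itself falls just short for a 20 kHz tone, while 44.1 kHz and 48 kHz both pass.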

Common sample rates

- The most common sample rates are 44.1 kHz, which is used for CDs and most MP3 files, and 48 kHz, which is used by professional digital video recorders.

- Some files have sample rates of 96 kHz and even 192 kHz! While you might think that higher sample rates could improve the sound further, bear in mind that we can only hear frequencies from 20 Hz to 20,000 Hz, so this increase doesn’t do much when rates like 44.1 kHz do enough to accurately store the audio… so then, why do audio engineers do this?

- To put it simply, when a signal contains frequencies above the Nyquist frequency (half the sample rate), aliasing occurs: those frequencies get recorded as lower frequencies that were never in the original sound, so audio that sounded perfectly fine can sound off when played back. This is also why the standard sample rate isn’t exactly 40 kHz, as you might expect from our range of hearing and the Nyquist theorem. Rates such as 44.1 kHz and higher leave some room above 20 kHz so that these higher frequencies can be filtered out before they mess with the final result.

- Higher sample rates mostly matter to audiophiles, dedicated listeners who want to hear the sound with more fidelity. To the common listener, a 44.1 or 48 kHz track and a 96 kHz track will sound the same on a standard set of speakers or headphones.
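Aliasing can be demonstrated numerically. The sketch below, which uses illustrative helper names and pure cosine tones rather than real audio, samples a 25 kHz tone at 44.1 kHz and shows that the resulting samples are indistinguishable from those of a 19.1 kHz tone, which is inside the audible range.

```python
import math

def alias_frequency(frequency_hz, sample_rate_hz):
    """Frequency a sampled tone appears at after folding around the Nyquist frequency."""
    folded = frequency_hz % sample_rate_hz
    return min(folded, sample_rate_hz - folded)

def sample_cosine(frequency_hz, sample_rate_hz, num_samples):
    """Sample a cosine tone at regular intervals."""
    return [
        math.cos(2 * math.pi * frequency_hz * n / sample_rate_hz)
        for n in range(num_samples)
    ]

SAMPLE_RATE = 44_100
alias_hz = alias_frequency(25_000, SAMPLE_RATE)
print(alias_hz)  # 19100

too_high = sample_cosine(25_000, SAMPLE_RATE, 50)
aliased = sample_cosine(alias_hz, SAMPLE_RATE, 50)

# Once sampled, the two tones produce the same sequence of values.
identical = all(math.isclose(a, b, abs_tol=1e-9) for a, b in zip(too_high, aliased))
print(identical)  # True
```

Because the samples are the same, nothing can separate the two tones after recording, which is why frequencies above the Nyquist frequency have to be filtered out before sampling.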

Audio Compression and Format Types

- Audio files can get very large very quickly because of the bit depth and sample rate of a song, so streaming uncompressed files was much more difficult in the early days of the internet than it is today.

- For example, a 24-bit sound file with a sample rate of 96 kHz requires 24 × 96,000 = 2,304,000 bits per second (equivalent to 288,000 bytes per second, or 288 KB/s). Keep in mind that this is only for mono sound, which uses a single audio channel. Most music today is stereo, which requires 2 channels, so double the information is needed for a 24-bit file. That brings the total data rate to 576 KB/s, which is a lot for just a single second of sound.

- To put that into perspective, if we had a 1 GB hard drive to hold songs on a portable music player, and each song was about 3 minutes long (roughly 104 MB per song at 576 KB/s), we could only fit about 9 songs on the drive.

- The original iPod had only about 5 GB of storage, which would hold about 48 such songs, or approximately 2.4 hours of listening time. For most people, storing such high-quality songs on a music player was inconvenient and, at the time, impractical.

- If you are curious about bitrates for other file formats, a full list of file size calculations for PCM and MP3 formats can be found on AudioMountain.com, including file sizes per minute and per hour. Keep in mind that the PCM bitrates listed there are doubled to account for stereo, though the mono bitrates are shown as well.

- Most types of media file, whether audio, video, vector, or raster images, use some type of compression to save on storage space. Each format has its own methods for encoding, decoding, reading, and writing. The main difference that sets most formats apart is whether they are lossy or lossless.
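The data-rate arithmetic in the examples above can be reproduced with a short calculation. This sketch assumes decimal units (1 KB = 1,000 bytes, 1 GB = 1,000,000,000 bytes) and 3-minute songs; the function name `pcm_bytes_per_second` is illustrative.

```python
def pcm_bytes_per_second(bit_depth, sample_rate_hz, channels):
    """Uncompressed PCM data rate in bytes per second."""
    bits_per_second = bit_depth * sample_rate_hz * channels
    return bits_per_second // 8

mono_rate = pcm_bytes_per_second(24, 96_000, 1)     # 288,000 bytes/s
stereo_rate = pcm_bytes_per_second(24, 96_000, 2)   # 576,000 bytes/s

SONG_SECONDS = 3 * 60
song_bytes = stereo_rate * SONG_SECONDS             # 103,680,000 bytes per song

songs_on_1gb = 1_000_000_000 // song_bytes          # 9
songs_on_5gb = 5_000_000_000 // song_bytes          # 48
hours_on_5gb = songs_on_5gb * SONG_SECONDS / 3600   # 2.4

print(mono_rate, stereo_rate, song_bytes)
print(songs_on_1gb, songs_on_5gb, hours_on_5gb)
```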

Lossy Data Compression uses algorithms to remove parts of the data that aren’t as important. In terms of music, this usually means removing parts of the sound that get drowned out by other, louder sounds. In return, this type of compression decreases the size of audio files drastically.

In most cases, the drop in quality is insignificant. When the file is decoded, much of the information is retained, but there will always be a net loss of information compared to the original.

Popular Lossy Formats

- MP3, short for MPEG-1 Audio Layer III, is the most widely known format. It was popularized in the early days of digital music players and is supported on most modern devices. Its wide range of compression settings can squeeze a track down to about one-tenth of its original size. MP3 is still used for music streaming because of its small, easy-to-stream file sizes and its sound quality, which is good enough for the average listener. MP3 is generally limited to 16-bit audio; it doesn’t work at 24-bit or 32-bit depths.

- AAC (Advanced Audio Coding, also referred to as MPEG-4 AAC) was designed as the successor to MP3. AAC files take up less space, but still retain much of the quality when decoded. This format is used by many popular streaming services such as YouTube and iTunes/Apple Music.

- OGG (Ogg Vorbis) is an open-source codec developed by Xiph.org that Spotify uses. Ogg Vorbis fills a similar role to MP3 but compresses more efficiently, and thus offers better sound quality than an MP3 track at a similar bitrate.

Lossless Data Compression uses algorithms that retain all of the information in the sound file, so the listener doesn’t lose anything. The tradeoff is that the reduction in file size is nowhere close to that of a lossy format. This is what most dedicated audio listeners use, since the decoded audio is identical to the original.

There aren’t as many lossless codecs as lossy codecs, but the most widely used formats have been FLAC and ALAC.

- FLAC (Free Lossless Audio Codec), also released by Xiph.org, is a free, open-source format that can reduce a PCM file to around half its total size.

- ALAC (Apple Lossless Audio Codec) was designed for use in Apple products and iTunes. When it was first launched in 2004, it was a proprietary codec, meaning you could only use it on Apple devices. Since 2011, it has been made open-source. It works fairly similarly to FLAC, reducing file size to around 40-60% of its original size.
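The defining property of lossless compression, that decoding gives back exactly the original data, can be checked with a small experiment. Note the assumptions: this sketch uses Python's general-purpose `zlib` module as a stand-in, not FLAC or ALAC, and the "lossy" step is a crude truncation of each sample to its top 8 bits, which is nothing like the psychoacoustic models real lossy codecs such as MP3 use. It only illustrates the reversible versus irreversible distinction.

```python
import math
import struct
import zlib

SAMPLE_RATE = 44_100

# One second of a 440 Hz tone as 16-bit signed PCM samples.
samples = [
    round(32767 * math.sin(2 * math.pi * 440 * n / SAMPLE_RATE))
    for n in range(SAMPLE_RATE)
]
pcm_bytes = struct.pack(f"<{len(samples)}h", *samples)

# Lossless: compress, decompress, and compare byte for byte.
compressed = zlib.compress(pcm_bytes, 9)
restored = zlib.decompress(compressed)
lossless_ok = restored == pcm_bytes
print(len(pcm_bytes), len(compressed), lossless_ok)

# Crude lossy stand-in: discard the 8 least significant bits of every sample.
truncated = [(s >> 8) << 8 for s in samples]
lossy_differs = truncated != samples
print(lossy_differs)
```

After the lossless round trip the bytes match exactly, while the truncated samples can never be restored to their original values.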

Sources used in this section:

Wikipedia, “Pulse-code modulation” : https://en.wikipedia.org/wiki/Pulse-code_modulation

MathWorks, “What Is Quantization?” : https://www.mathworks.com/discovery/quantization.html

Electronics Tutorials, “Analogue to Digital Converter” : https://www.electronics-tutorials.ws/combination/analogue-to-digital-converter.html

Peter Elsea, “Basics of Digital Recording”, 1996 : http://artsites.ucsc.edu/EMS/Music/tech_background/TE-16/teces_16.html

Michel Kulhandjian, Hovannes Kulhandjian, Claude D’Amours, and Dimitris Pados, “Digital Recording System Identification Based on Blind Deconvolution”, 2019 : https://www.researchgate.net/publication/331979558_Digital_Recording_System

Wikipedia, “Sampling (signal processing)” : https://en.wikipedia.org/wiki/Sampling_(signal_processing)

Emiel Por, Maaike van Kooten, and Vanja SarKovic, “Nyquist-Shannon sampling theorem”, May, 2019 : https://home.strw.leidenuniv.nl/~por/AOT2019/docs/AOT_2019_Ex13_NyquistTheorem.pdf

Wikipedia, “Nyquist-Shannon sampling theorem” : https://en.wikipedia.org/wiki/Nyquist%E2%80%93Shannon_sampling_theorem

Christopher D’Ambrose, “Frequency Range of Human Hearing”, 2003 : https://hypertextbook.com/facts/2003/ChrisDAmbrose.shtml

Griffin Brown, iZotope, “Digital Audio Basics: Audio Sample Rate and Bit Depth”, May 9, 2021 : https://www.izotope.com/en/learn/digital-audio-basics-sample-rate-and-bit-depth.html

Wikipedia, “IPod Classic” : https://en.wikipedia.org/wiki/IPod_Classic

Adobe (UK site), “Lossy vs Lossless Compression: Differences and When to Use” : https://www.adobe.com/uk/creativecloud/photography/discover/lossy-vs-lossless.html

Khan Academy, “Lossy Compression” : https://www.khanacademy.org/computing/computers-and-internet/lossy-compression

Adobe, “Best Audio Format” : https://www.adobe.com/creativecloud/video/discover/best-audio-format.html

Stephen Robles, “Lossy vs Lossless Audio Formats: How to Choose the Right One?”, Oct 17, 2023 : https://riverside.fm/blog/lossless-audio-formats

AudioMountain.com, “Audio File Size Calculations” : https://www.audiomountain.com/tech/audio-file-size.html

Wikipedia, “FLAC” : https://en.wikipedia.org/wiki/FLAC

Wikipedia, “Apple Lossless Audio Codec” : https://en.wikipedia.org/wiki/Apple_Lossless_Audio_Codec

Xiph.org : https://xiph.org/